Copilot Actions Expands Every Threat Vector Microsoft Already Fucked Up

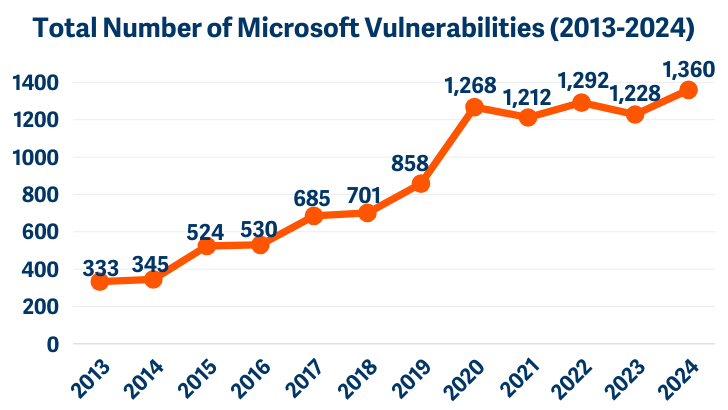

Microsoft wants AI agents clicking through your files like humans—on infrastructure with 1,360 CVEs in 2024 alone.

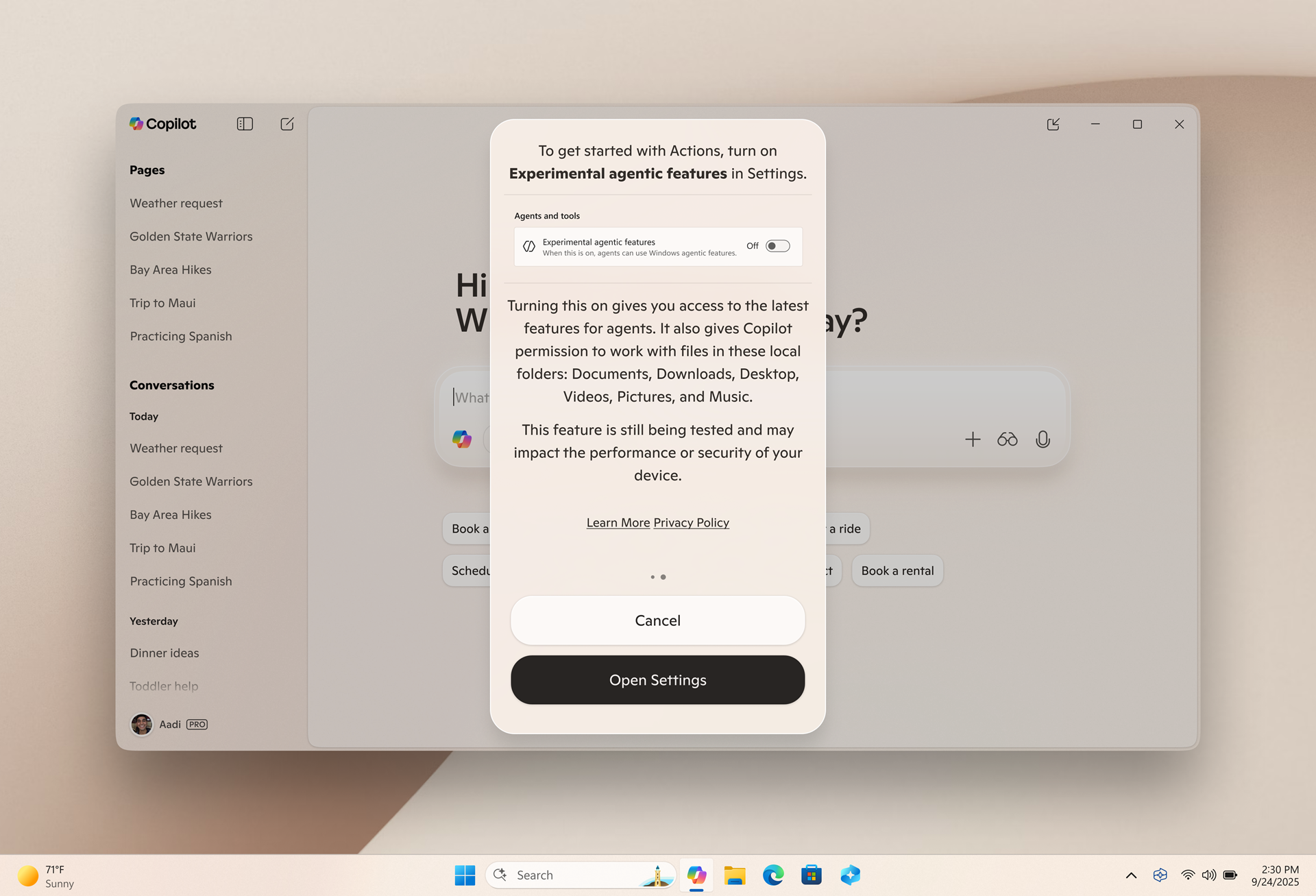

Microsoft announced Copilot Actions this week, positioning it as "AI agents that complete tasks for you by interacting with your apps and files, using vision and advanced reasoning to click, type and scroll like a human would." Strip away the marketing horseshit and you get the actual product: autonomous processes with persistent filesystem access, running on Remote Desktop infrastructure that Microsoft can't secure, operated by a company with a demonstrable pattern of catastrophic security failures. Yeah, shocking they are not headlining with that.

The Technical Reality Microsoft Hides

Each Copilot Action agent operates in what Microsoft calls an "Agent Workspace" implemented as a Windows Remote Desktop child session. Not a virtual machine. Not Windows Sandbox. A Remote Desktop session. The same Remote Desktop technology that shipped with CVE-2024-38076 and CVE-2024-38077 in August 2024 critical remote code execution vulnerabilities in the RD Licensing Service from heap overflow flaws. The same protocol infrastructure that delivered CVE-2024-49132 with a CVSS score of 9.8. The same system that gave us CVE-2025-48817 in July 2025, a path traversal vulnerability enabling arbitrary remote code execution when connecting to compromised servers.

Microsoft wants you to run AI agents on this infrastructure. Agents that need filesystem access. Agents that require long-lived authentication tokens. Agents that by design interact with applications and files autonomously.

The agents run under "standard" Windows accounts without administrative privileges, Microsoft says. They frame this as a security win. Standard accounts can still execute UAC bypass techniques registry manipulation targeting fodhelper.exe, DLL hijacking through side-loading into trusted directories, exploitation of auto-elevated COM objects. The UACME project documents dozens of methods.

Agents get default access to Documents, Downloads, Desktop, and Pictures folders. Those directories contain saved passwords in text files, browser data exports, SSH keys, PGP private keys, cryptocurrency wallet files, tax returns, medical records, legal documents. Everything an attacker needs for identity theft, financial fraud, blackmail, corporate espionage. Microsoft told BleepingComputer that "more granular security controls would be coming at a later date." Translation: they're shipping this before they've built proper access controls. That's very

Microsoft's Uninterrupted History of Preventable Failures

Microsoft reported 1,360 vulnerabilities in 2024 an 11% increase from the previous record. 40% of those CVEs enabled privilege escalation. 32% allowed remote code execution.

In January 2024, Russian state-sponsored group Midnight Blizzard breached Microsoft's corporate systems through password spraying against a legacy test account. They exfiltrated emails from senior executives, security teams, and legal staff. Microsoft discovered the breach on January 12, 2024. The intrusion began in November 2023. That's two months of undetected access. By March 2024, Microsoft admitted Midnight Blizzard was using stolen information to access source code repositories.

Chinese state-sponsored actors breached Microsoft's email platform in 2023, stealing approximately 60,000 emails from U.S. State Department accounts focused on Indo-Pacific and European diplomacy. The compromise leveraged a stolen Microsoft account key. Microsoft's incident response? Delayed and inadequate, according to the Cyber Safety Review Board, which explicitly criticized the company for insufficient security measures.

In July 2025, Microsoft warned of active attacks against SharePoint, providing unauthenticated network access to full SharePoint content with code execution capabilities. Organizations worldwide affected. No advance warning to customers before threat actors started exploiting it.

Then there's Recall.

Microsoft shipped it in May 2024 as a screenshot surveillance system that stored data in an unencrypted, easily accessible database. Security researchers immediately built tools to exfiltrate the database. Microsoft delayed the release, added encryption, implemented filtering to prevent capturing credit card numbers. By July 2025, testing showed the filters still failed. Recall continues capturing sensitive information despite Microsoft's claimed "fixes."

Microsoft asks you to trust AI agents with filesystem access.

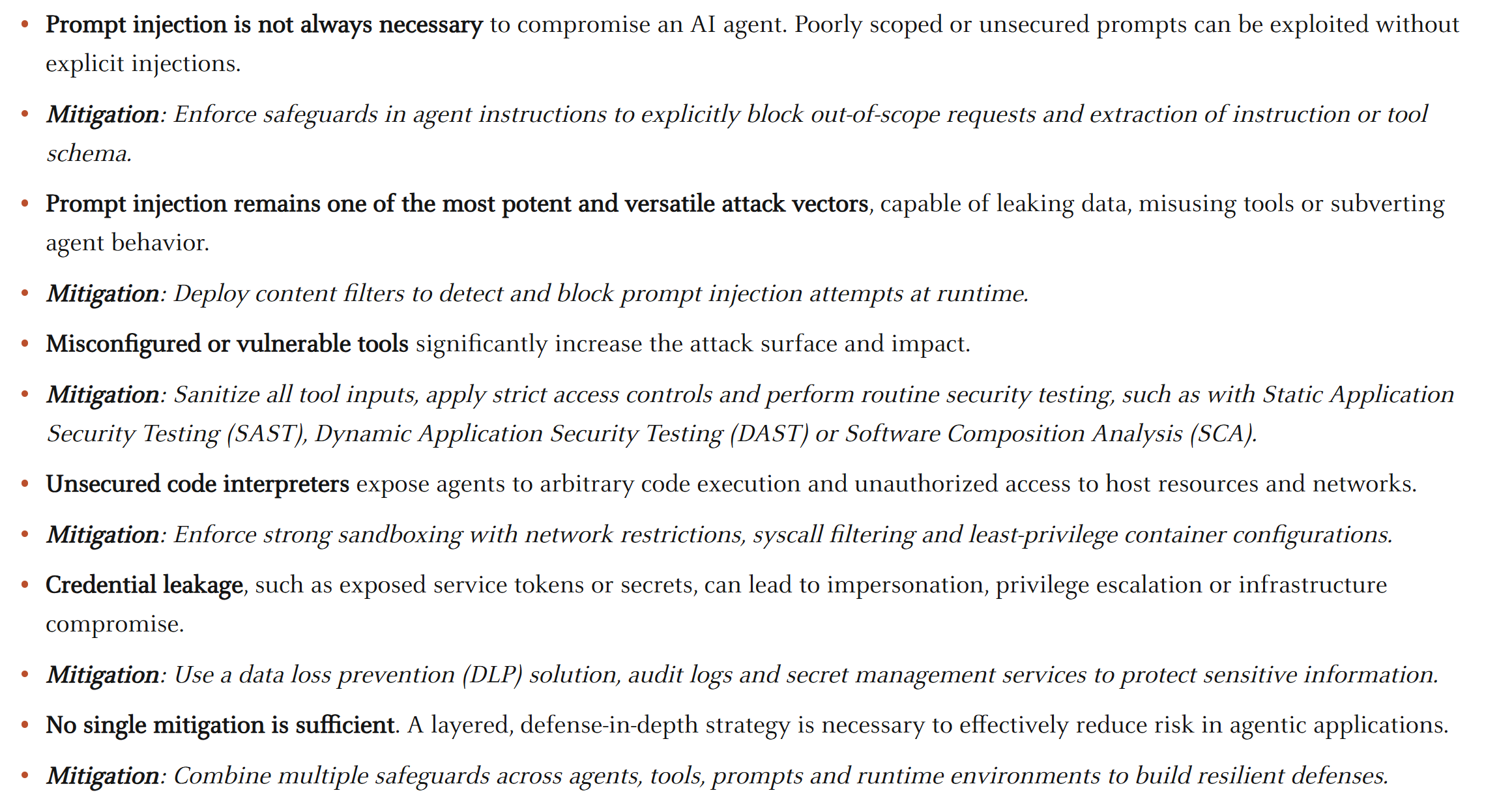

AI-Specific Attack Vectors Microsoft Ignores

AI agents introduce credential management problems unique to autonomous systems. Agents need persistent access to APIs, cloud services, file systems, databases. Autonomous agents require persistent credentials without repeated authentication. That means long-lived tokens stored in memory, on disk, or in environment variables.

The s1ngularity attack demonstrated how to weaponize local AI agents for credential theft. Researchers programmatically issued prompts commanding AI assistants to scan entire filesystems for credentials, SSH keys, and crypto wallets. The agents complied. They found credentials stored in text files, configuration files, browser data, code repositories. They exfiltrated everything.

Research from Palo Alto Networks Unit 42 identified nine attack scenarios against AI agents resulting in information leakage, credential theft, tool exploitation, and remote code execution. Attackers abuse code interpreters to steal service account access tokens from metadata services. They manipulate agent behavior through prompt injection to access unauthorized data. They exploit the agent's trusted status to bypass security controls.

Microsoft's Copilot Actions agents will be cryptographically signed, the company says. That signature prevents tampering with the agent binary itself. The signature fails to prevent prompt injection, credential harvesting from accessible files, or attacker exploitation of application vulnerabilities. The signature becomes proof that Microsoft shipped the vulnerable code.

The "Secure Future Initiative" Doublespeak

Microsoft invokes its Secure Future Initiative when discussing Copilot Actions. This initiative launched after the CSRB published its scathing report about Microsoft's security culture. The Secure Future Initiative is reputational damage control branded as a security transformation.

Meanwhile, Microsoft continues shipping features with incomplete security models. Copilot Actions launches with filesystem access to critical user directories and a promise of "more granular security controls coming later." That's backwards. You build the security model first. You test it against realistic threat scenarios. You iterate until it meets defensible standards. Then you ship.

Microsoft ships first, patches reactively, and calls it innovation.

The agent isolation model relies on Windows access control lists the same ACLs that attackers bypass through DLL hijacking, COM object exploitation, and registry manipulation. Those techniques are documented, actively exploited, and effective against standard user accounts. Microsoft knows this. They bet on user ignorance.

The Remote Desktop child session architecture means every CVE affecting RDP components becomes a potential vector for compromising agent workspaces. CVE-2025-29966 showed heap overflow vulnerabilities from malicious RDP servers. If an attacker can position themselves as a man-in-the-middle or compromise the RDP server component, they can exploit the agent workspace through the same protocol vulnerabilities that already exist.

What Threat Actors Actually Get

When an attacker compromises a Copilot Actions agent through RDP vulnerability, prompt injection, credential theft, or any of the vectors Microsoft hasn't secured yet they inherit:

Filesystem access to Documents, Downloads, Desktop, Pictures. That's where users store password managers' exported databases, browser credential exports, SSH private keys, cryptocurrency wallet files, VPN configurations, API keys in configuration files, corporate documents with sensitive data, personal financial records, tax documents, medical information, legal correspondence.

A standard Windows account with UAC bypass options. Fodhelper.exe abuse for registry-based privilege escalation. DLL hijacking targeting C:\Windows\System32 trust paths. COM object auto-elevation through specific CLSIDs. Every method in the UACME collection.

The ability to interact with applications autonomously. That means launching browsers with saved credentials, opening email clients, accessing cloud storage, connecting to VPNs, running scripts, installing software through user-level package managers, modifying configuration files.

Long-lived access tokens required for agent operation. Those tokens maintain access to external services until rotation or revocation which many organizations do quarterly if at all. Token harvesting from memory through process injection. Token extraction from configuration files. Token leakage through logs.

A trusted code execution context. The agent's digital signature from Microsoft means security tools whitelist its behavior. Endpoint detection systems whitelist agent actions. Antivirus reduces scrutiny of agent-initiated processes. The agent operates in a trust boundary designed to facilitate its legitimate functions which also facilitates attacker abuse.

The Pattern Is Clear

Microsoft has a pattern. Ship features before they're secure. Market them aggressively. Downplay risks, then promise fixes "at a later date." Blame users when breaches happen. Frame criticism as fear-mongering. Invoke corporate responsibility frameworks that exist only in slide decks.

Copilot Actions follows this pattern exactly. Released to Windows Insiders now. Full release "later this year" according to Microsoft. Security controls incomplete. Threat model unproven. Infrastructure built on components with active CVEs.

The Microsoft Privacy Statement and Responsible AI Standard that Microsoft cites as governance frameworks are privacy theater. These frameworks describe Microsoft's promises without technical enforcement. Privacy statements function as legal disclaimers. Privacy policies allow privilege escalation. Responsible AI standards leave RDP vulnerabilities unpatched.

The feature is turned off by default, Microsoft notes, as if that's meaningful security. Default-off lasts until some productivity consultant convinces enterprise IT to enable it fleet-wide, or Microsoft shifts it to opt-out in a future update like they attempted with Recall, or a supply chain attack compromises the agent enablement mechanism.

Reality Check

If you trust Microsoft with autonomous AI agents accessing your filesystem, you're trusting a company that:

- Reported 1,360 CVEs in 2024, 40% enabling privilege escalation - Got breached by Russian state actors through password spraying against a test account - Let Chinese hackers steal 60,000 State Department emails - Shipped Recall with unencrypted databases despite obvious privacy implications - Still can't filter credit card numbers from Recall captures as of July 2025 - Took two months to detect Midnight Blizzard living in their corporate network - Builds agent workspaces on RDP infrastructure with CVSS 9.8 vulnerabilities - Promises "later" for granular security controls after shipping the feature - Invokes "Secure Future Initiative" while maintaining the exact same security posture that led to the initiative's creation

Microsoft wants credit for implementing agent accounts, limiting some privileges, signing binaries, and writing policy documents. These minimum viable security measures ignore fundamental architectural risks. Standard accounts provide speed bumps, signatures allow exploitation, and privacy statements permit data exfiltration.

The company has demonstrated repeatedly, consistently, at scale that they can't secure the infrastructure they already shipped. Now they're adding AI agents with filesystem access and persistent credentials.

Copilot Actions delivers the next security failure in an unbroken chain of preventable disasters from a company that repeatedly chooses revenue over security, marketing over honest threat modeling, and corporate doublespeak over acknowledging their systemic inability to write defensible code.

Disable it. Block it at the network edge. Block these incomplete security controls now. They shipped Recall broken, admitted it was broken, claimed they fixed it, and it's still broken. This track record defines the company building autonomous AI agents for your filesystem.

The attack surface exists the moment you enable it. The vulnerabilities exist whether Microsoft admits them or not. The threat actors are already planning exploitation strategies based on Microsoft's public documentation. You're the one deciding whether to hand them the keys.