California Forces Police to Disclose AI-Written Reports

California just mandated that police disclose when artificial intelligence writes their reports, and banned vendors from selling the data they collect. Here's what the law does, and why it doesn't matter.

Imagine you're so lazy at your job that you feed everything to ChatGPT instead of doing the work. Now imagine that output isn't organizing spreadsheets; it's putting someone in prison for 30 years. That's what police departments have been doing with AI-generated reports. The fact they thought this was acceptable reveals exactly where their priorities sit.

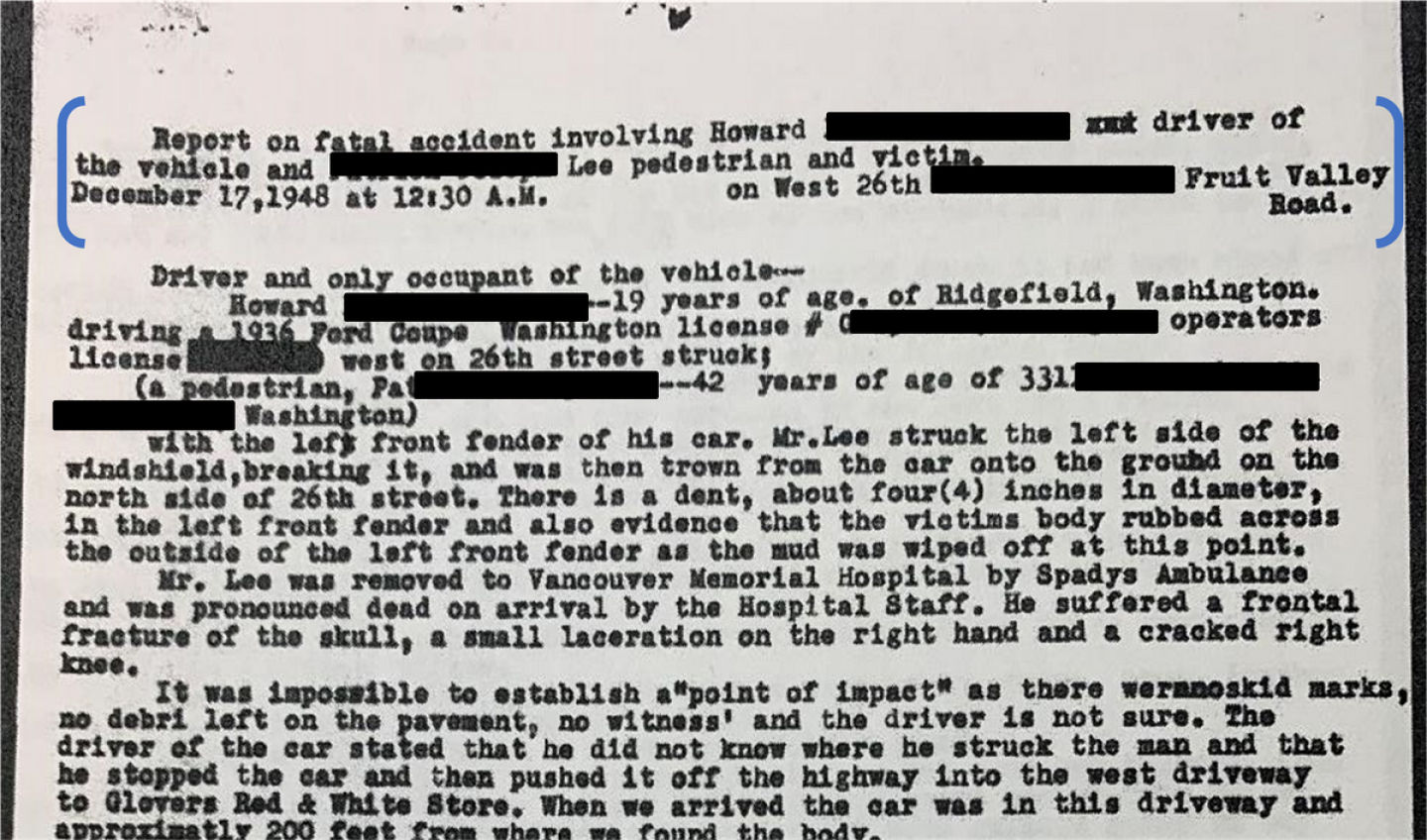

California, a state that usually excels at backwards legislation, just closed a dangerous accountability gap (kind of). Police across California have been feeding body camera footage and incident notes into AI systems that write official reports, because reading and writing is hard. Those reports go to prosecutors, judges, defense attorneys. They determine charges, case outcomes, sentencing. Until October 2025, nobody had to tell you a machine wrote them.

S.B. 524 changes that. Police must disclose AI involvement in the reports themselves. Not in internal memos or buried on department websites. In the actual documents presented to courts. If an algorithm touched it, the report must say so.

The law bans vendors from selling or sharing information police provide to AI systems (LOL). Body camera footage, audio recordings, witness statements, and location data are all "off-limits for monetization", and of course they will respect that (sarcasm).

Matthew Guariglia at the Electronic Frontier Foundation analyzed the bill's passage. EFF backed it because you cannot challenge evidence you don't know exists. If a report presented as an officer's firsthand account came from AI parsing footage, that changes everything about accuracy, completeness, and reliability.

Defense attorneys need to know if they're reading human observations or a language model's interpretation. Judges need to know if probable cause statements reflect officer judgment or algorithmic pattern matching. The public needs to know if reports shaping policy were written by accountable humans or software nobody can cross-examine.

Departments must retain all drafts showing AI and human contributions. Axon dominates the police body camera market. Their product Draft One generates reports from footage but doesn't track edit history distinguishing AI text from human edits. Draft One must change or departments using it violate California law.

Retention requirements create auditable records. When someone challenges a report, investigators can trace which parts came from AI versus which parts an officer modified. Without that record, you cannot assess whether AI introduced errors, whether the officer corrected them, or whether the officer made output worse by removing AI-generated caveats.
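To make that concrete, here is a minimal sketch of what tracing a report could look like if a department actually retained the AI's first draft next to the signed version. Everything in it is an assumption for illustration: the function name `attribute_edits`, the labels, and the sample sentences are made up, and it uses Python's standard `difflib` rather than anything Axon or any department is known to run.

```python
import difflib


def attribute_edits(ai_draft: str, final_report: str):
    """Label each span as kept from the AI draft, removed by the officer,
    or added by the officer. Hypothetical helper, not a vendor API."""
    ai_words = ai_draft.split()
    final_words = final_report.split()
    matcher = difflib.SequenceMatcher(a=ai_words, b=final_words, autojunk=False)

    spans = []
    for tag, a0, a1, b0, b1 in matcher.get_opcodes():
        if tag == "equal":
            spans.append(("ai_kept", " ".join(ai_words[a0:a1])))
            continue
        # "delete" and "replace" both drop AI text; "insert" and "replace" both add officer text.
        if a1 > a0:
            spans.append(("ai_removed", " ".join(ai_words[a0:a1])))
        if b1 > b0:
            spans.append(("officer_added", " ".join(final_words[b0:b1])))
    return spans


# Made-up example: the officer strips the AI's hedge and its caveat.
ai_draft = "The subject possibly reached toward his waistband. Audio is unclear."
final_report = "The subject reached toward his waistband."

for label, text in attribute_edits(ai_draft, final_report):
    print(f"{label}: {text}")
```

In this invented example, the word "possibly" and the note about unclear audio both show up as removed AI text, which is exactly the "officer made the output worse" scenario. Without the retained draft there is nothing to diff.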

Police reports carry institutional weight because we assume they come from trained observers with legal accountability.

AI has neither.

It generates text optimized for coherence, not accuracy. When an officer signs an AI-generated report, who's responsible for the parts they didn't write and might not have read?

Some departments will argue this creates extra paperwork. A single sentence identifying AI-generated sections satisfies the requirement. Departments already using these tools generate reports anyway. Adding attribution is trivial compared to the accountability it enables.

The law applies to all California police agencies: city police, county sheriffs, state law enforcement. No exemptions for federal partnerships or joint task forces, and no "national security" carve-outs. Vendors cannot monetize the data. Information fed to AI systems stays confined to the specific report requested.

Now for the question nobody's asking out loud: Will police actually comply?

History says no.

Police departments have decades of practice ignoring transparency laws with zero consequences.

Minneapolis Police Department: Department of Justice investigation found shootings, beatings, and abuse routinely captured on body cameras. The department never released footage. Officers faced no punishment.

Memphis street crimes unit: Officers wore body cameras while systematically abusing residents. There were zero consequences.

New York City has had officers violate body camera policies repeatedly. Civilian oversight board recommended discipline. Police commissioner ignored every recommendation. Officers kept their jobs.

Maryland, 2023: Montgomery County detective interviewed a sexual assault victim and her parents. "Keystroke error" deleted all recordings. Case dismissed. Detective never charged with evidence destruction. Would YOU be charged if you did something like this? (The answer is yes.)

Pennsylvania, 2024: Courts ruled defendants must prove "bad faith" before police face consequences for destroying evidence. Proving intentional destruction versus "accident" is nearly impossible, and officers understand this. Moreover, a prisoner sitting in a cell with no resources, no phone, and in some counties no access to a book (Essex County in MA, I'm looking at you) is not going to be able to challenge this at any meaningful level whatsoever.

Twenty-five states have fines for violating transparency laws. Maximum penalties: $100 to $1,000. Kansas Department of Transportation violated open records law with no reasonable basis. Court ordered them to pay a fraction of the newspaper's legal fees. And even if a police department is held liable by some miracle, do you know where that money will come from? The same place it comes from when they get sued: you, the taxpayer.

Federal agencies routinely refuse FOIA requests for basic guidance documents. Customs and Border Protection is among the worst offenders. Requests go unanswered with no penalties or enforcement, despite the fact that all these agencies are in essence public servants, funded by we the people.

S.B. 524 has the same enforcement problem. What happens when departments ignore it? Who checks? Who enforces?

The bill contains zero penalty provisions. No fines, no criminal charges, in fact no enforcement mechanism at all. Police departments violating disclosure requirements face no consequences beyond potential case dismissal years later, if defense counsel catches it and can actually substantiate it at that point.

Even those writing laws regulating technology don't understand the technology. Five days before the EFF article, U.S. Magistrate Judge Ona Wang reversed her own May 2025 order requiring OpenAI to preserve all ChatGPT conversations indefinitely. Five months after issuing it, she lifted the order after realizing the operational absurdity. Can you imagine being able to issue a legally enforceable court order while being so dumb that you don't even understand what it means operationally? This is what federal judges are like. Now imagine that same person deciding whether you get one year in prison or 50.

If federal judges can't grasp the implications of technology they're regulating in active litigation, legislators writing broad policy stand no chance. S.B. 524 mandates disclosure without defining penalties because the people writing it didn't consider enforcement mechanisms, or knew that they would be exempt from this anyway. They assumed compliance, because when you live in an ivory tower, it actually exists.

Police don't assume compliance. Much of the time, they assume impunity instead. And S.B. 524's complete absence of penalties confirms they're correct.

Governments demand transparency from citizens while operating behind walls. When a government document affects someone's liberty, that person deserves to know how it was created. Yet here we are, needing toothless legislation to state the obvious. To me this just smells like a way to shut people up about the actual issue. A good course of action would be to create a shirt with a QR code that says "innocent" whenever you commit a crime. Or "my name is Mike Smith". It's a ridiculous comparison, but I bet the person actually writing the police report would know what that QR code says without having to look it up.

California is not perfect on surveillance. In fact, on my YouTube channel I've documented the state actually allowing utility companies and law enforcement to work together to spy on its citizens and extort them. License plate readers proliferate unchecked. Gang databases operate with minimal oversight. Fusion centers share information with federal agencies subject to even fewer restrictions. But S.B. 524 is a legitimate win in the eyes of many, because it creates transparency requirements and prevents commercial exploitation of law enforcement data.

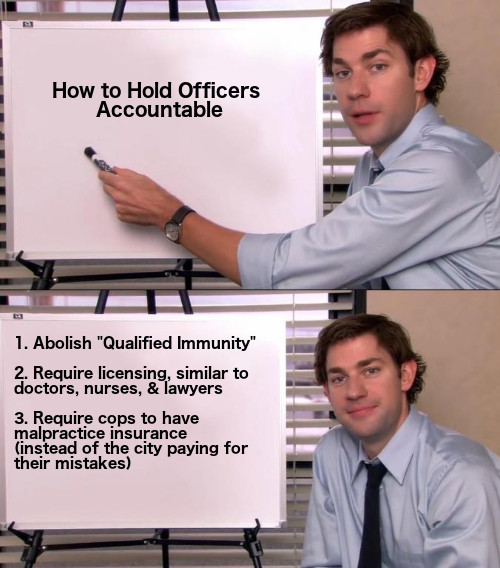

Axon announced Draft One in April 2024. Within months, departments nationwide were testing it. By the time legislatures noticed, the technology had embedded itself in workflows. Other states should copy California's model before AI report-writing becomes standard practice everywhere. However, when they do, they should call for an outright ban on it, as well as an exception to qualified immunity in the states that still allow that practice for law enforcement. This would make the officer who actually violates the law personally liable, instead of the department and therefore the taxpayer.

The law takes effect immediately. Defense attorneys can demand disclosure and draft histories now, but good luck. In this case they are 100% dependent on the honesty of the individual who submitted the report.

OPSEC implications: If police generate a report about you, that report may contain AI-generated content. Disclosure requirements mean you can find out. Draft retention means you can verify what AI produced versus what the officer changed. If the final report includes AI hallucinations or omits AI-generated context, you have evidence.

Discovery requests for AI-generated reports should include: original footage/audio the AI analyzed, AI's initial output, all subsequent drafts, documentation of who made which edits. If departments cannot produce that record, the report's reliability collapses. If they produce it showing significant divergence between AI output and final report, you have grounds to challenge the officer's edits. In addition, this opens the providers themselves up to scrutiny: if a model is closed source, how can anyone actually verify the process by which it describes a given situation?
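The checklist above is simple enough to track mechanically. The following is only a sketch under stated assumptions: the keys in `REQUIRED_ITEMS` and the `missing_items` helper are hypothetical names invented here, not terms from S.B. 524 or from any discovery standard, and the sample production is made up.

```python
# Hypothetical checklist keys mapped to the four items listed above.
REQUIRED_ITEMS = {
    "source_media": "original footage/audio the AI analyzed",
    "ai_initial_output": "the AI's initial output",
    "subsequent_drafts": "all subsequent drafts",
    "edit_attribution": "documentation of who made which edits",
}


def missing_items(produced: set[str]) -> list[str]:
    """Return descriptions of checklist items the department did not produce."""
    return [desc for key, desc in REQUIRED_ITEMS.items() if key not in produced]


# Made-up production: footage and later drafts, but no AI output or edit log.
produced = {"source_media", "subsequent_drafts"}
for gap in missing_items(produced):
    print("Not produced:", gap)
```

Anything this prints is a gap to raise with the court, since each missing piece is one the report's reliability depends on.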

The law ignores predictive policing (law enforcement is supposed to be reactive, not proactive, meaning you shouldn't be arrested for getting mad because it could lead to a murder), facial recognition, and other AI applications. It's narrowly focused on report writing. It establishes a weak principle: when AI affects people's rights and lives, those people have a right to know. Police have violated rights for decades with impunity. Whether they'll actually comply with this law remains an open question only to those still seated in ivory towers.

Credit to Matthew Guariglia and the Electronic Frontier Foundation. EFF doesn't have the lobbying budget of police unions or tech companies. They win by building coalitions, documenting harms, and making the case that civil liberties aren't negotiable. This law exists because they forced it to exist, which at the end of the day is difficult to do, to say the least. Them getting this is badass, even if it's a toothless law.

California police are now supposed to be honest about when machines write their reports. Vendors are supposed to treat law enforcement data as something other than a revenue stream. Defense attorneys have a new tool to challenge reports that were always questionable but never labeled as AI-generated.

Whether those improvements matter depends entirely on enforcement.

Watch how this plays out. Some departments will comply. Most will interpret disclosure requirements narrowly: footnotes, vague language, technical jargon obscuring how much AI generated the report. Vendors will lobby for weakening amendments. Departments will adopt tools hosted outside California to evade the data-sharing ban. Police unions will challenge any penalties lawmakers try to add. Prosecutors will minimize violations during trials.

The pattern is established. Minneapolis captured abuse on cameras, never released footage, punished nobody. Maryland detective deleted sexual assault recordings, faced no charges. Federal agencies ignore FOIA requests with zero consequences. Twenty-five states have transparency law penalties maxing out at $1,000: joke fines for million-dollar budgets.

S.B. 524 sets a standard. Police must disclose AI authorship. Vendors cannot monetize law enforcement data. Those requirements are now law in the largest state in the country, covering thousands of agencies and millions of interactions per year.

Enforcement will determine whether that standard means anything or becomes another law police ignore because they've learned they can.